deepC

Vendor-independent TinyML deep learning library, compiler, and inference framework for microcomputers and micro-controllers

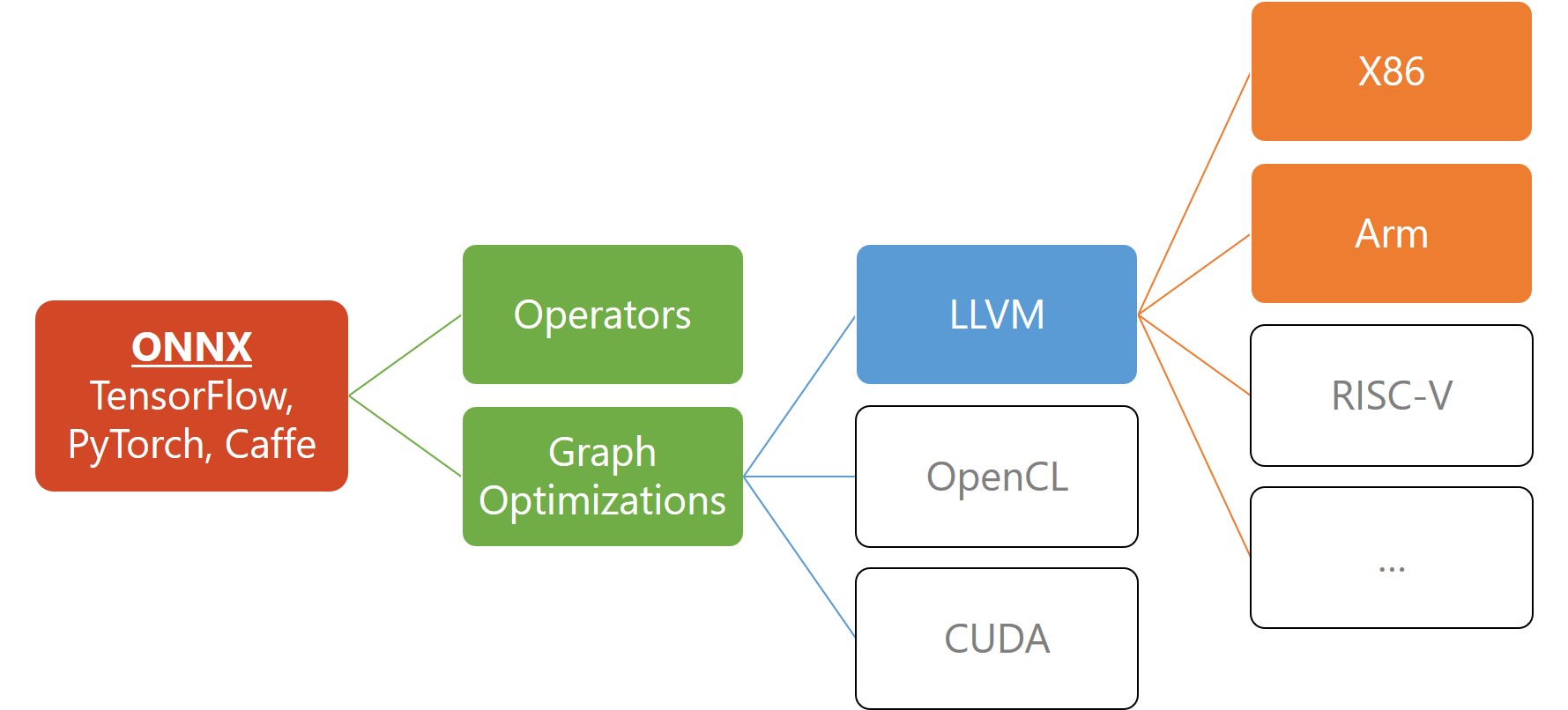

High-level architecture

Front End

This part of the design produces LLVM 8.0 IR (Intermediate Representation) without regard to accelerator-specific optimizations, which are handled individually in the back-end support for each device.

ONNX support

ONNX has two official variants:

- the neural-network-only ONNX, and

- its classical machine learning extension, ONNX-ML.

DNNC supports neural-network-only ONNX, with tensors as the only input and output types (sequences and maps are not supported).
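To make the tensor-only restriction concrete, here is a minimal sketch of a pre-flight check that lists any graph inputs or outputs that are not tensors. It is not part of DNNC; the function names `non_tensor_values` and `check_file` are hypothetical, and the sketch assumes the third-party `onnx` Python package, whose `TypeProto` message exposes the value kind through a oneof field named `value`.

```python
"""Sketch: check that an ONNX model uses only tensor inputs and outputs."""


def non_tensor_values(model):
    """Return (name, kind) pairs for graph inputs/outputs that are not tensors.

    `model` is expected to be an onnx.ModelProto. Each graph input or output
    is a ValueInfoProto whose `type` holds a oneof called "value", set to
    tensor_type, sequence_type, map_type, and so on.
    """
    offenders = []
    for value_info in list(model.graph.input) + list(model.graph.output):
        kind = value_info.type.WhichOneof("value")
        if kind != "tensor_type":
            offenders.append((value_info.name, kind))
    return offenders


def check_file(path):
    """Load an ONNX file and report whether it is tensor-only (hypothetical helper)."""
    import onnx  # third-party dependency, assumed installed: pip install onnx

    offenders = non_tensor_values(onnx.load(path))
    for name, kind in offenders:
        print(f"unsupported value '{name}': {kind}")
    return not offenders
```

Calling `check_file` with the path to a model file returns `True` when every graph input and output is a tensor. A model that relies on sequences or maps would be reported here before it is handed to the compiler.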